Deploying a centralized logging system using ELK Stack with Redis (Managed by Kubernetes)

When you develop a system and publish it to a cloud platform, its micro-services have to work together in harmony, ideally without failures. But even with a good cloud infrastructure in place, the system needs a finely tuned logging setup that we can rely on to see which service is down, or which micro-service is misbehaving. A centralized logging system also means developers no longer depend on DevOps to fetch log data when something happens. Everything should be automated so that anyone who needs logs can see them, subject to appropriate access restrictions in the logging system.

Which Stack Do We Choose?

When choosing a stack for centralized logging, we should weigh several criteria: whether the stack is fast, whether it is open source or commercial, whether it is a third-party service (usually hosted in the cloud), and so on. I chose the ELK stack because it has a lot of advantages over the others. For more on why to choose the ELK stack, check this Link out. For now I'm more interested in implementing the ELK stack in Kubernetes in a simple way, with the addition of Redis to control the flow of data from the micro-services to Logstash. In other words, Redis acts as a queue in front of Logstash, keeping it stable and protecting it from being overwhelmed by bursts of incoming data.

Before We Begin

I usually use DigitalOcean's Kubernetes cluster for testing, but you can also use Minikube to test on your local machine. To keep Kubernetes objects apart, it helps to name the YAML files after what they contain. For example, when creating a Namespace object, I usually create a file called k8s-namespace.yaml in a parent directory named "logging"; the directory name indicates which part of the infrastructure its YAML files belong to, which in our case is logging.

Let's Roll

1: Creating our Namespace and Elasticsearch Service

1.1: Let's create the Namespace YAML file.

N.B. The initial comment is the name of the file.

# k8s-namespace.yaml
kind: Namespace
apiVersion: v1
metadata:
  name: elk-stack

1.2: Creating a k8s object called StatefulSet (YAML file).

Before we proceed: a StatefulSet is chosen here because it assigns a unique, stable network identity to each pod it manages, and it gives each pod stable, persistent storage. The nice thing about this object is that even if the StatefulSet is deleted, the volumes created from its volumeClaimTemplates are kept, so the data is not lost by accident. Here is a Link if you want to know more about the StatefulSet object.

N.B. Notice that our Elasticsearch container is configured with the password "changeme" (the ELASTIC_PASSWORD variable).

# k8s-elasticsearch-statefulset.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es-cluster
  namespace: elk-stack
spec:
  serviceName: elasticsearch
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      containers:
        - name: elasticsearch
          image: docker.elastic.co/elasticsearch/elasticsearch:7.8.0
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          ports:
            - containerPort: 9200
              name: rest
              protocol: TCP
            - containerPort: 9300
              name: inter-node
              protocol: TCP
          volumeMounts:
            - name: data
              mountPath: /usr/share/elasticsearch/data
          env:
            - name: cluster.name
              value: k8s-logs
            - name: network.host
              value: 0.0.0.0
            - name: node.name
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: discovery.seed_hosts
              value: "es-cluster-0.elasticsearch"
            - name: cluster.initial_master_nodes
              value: "es-cluster-0"
            - name: xpack.license.self_generated.type
              value: "trial"
            - name: xpack.security.enabled
              value: "true"
            - name: xpack.monitoring.collection.enabled
              value: "true"
            - name: ES_JAVA_OPTS
              value: "-Xms256m -Xmx256m"
            - name: ELASTIC_PASSWORD
              value: "changeme"
      initContainers:
        - name: fix-permissions
          image: busybox
          command:
            ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
          securityContext:
            privileged: true
          volumeMounts:
            - name: data
              mountPath: /usr/share/elasticsearch/data
        - name: increase-vm-max-map
          image: busybox
          command: ["sysctl", "-w", "vm.max_map_count=262144"]
          securityContext:
            privileged: true
        - name: increase-fd-ulimit
          image: busybox
          command: ["sh", "-c", "ulimit -n 65536"]
          securityContext:
            privileged: true
  volumeClaimTemplates:
    - metadata:
        name: data
        labels:
          app: elasticsearch
      spec:
        accessModes: ["ReadWriteOnce"]
        storageClassName: do-block-storage
        resources:
          requests:
            storage: 5Gi
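
The StatefulSet above keeps ELASTIC_PASSWORD as a plain value in the manifest, which is fine for a test cluster. As a rough sketch of an alternative, the password could live in a Kubernetes Secret and be referenced from the container's env list. The Secret name "elasticsearch-credentials" and the key "password" are hypothetical names picked for this illustration; they are not part of the original files.

```yaml
# Hypothetical Secret holding the Elasticsearch password.
# The name and key are illustrative assumptions, not part of the original repo.
apiVersion: v1
kind: Secret
metadata:
  name: elasticsearch-credentials
  namespace: elk-stack
type: Opaque
stringData:
  password: changeme
```

```yaml
# Fragment only (not a standalone manifest): this entry would take the place of
# the plain ELASTIC_PASSWORD entry in the env list of the elasticsearch container.
- name: ELASTIC_PASSWORD
  valueFrom:
    secretKeyRef:
      name: elasticsearch-credentials
      key: password
```

Logstash and Kibana read the same password from their own configuration, so they would still need the matching value there.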

1.3: Headless Service For StatefulSet Object

Our third step is to create a headless Service for our StatefulSet object, because a StatefulSet requires a headless Service to give its pods their network identity.

# k8s-elasticsearch-svc.yaml
kind: Service
apiVersion: v1
metadata:
  name: elasticsearch
  namespace: elk-stack
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  clusterIP: None
  ports:
    - port: 9200
      name: rest
    - port: 9300
      name: inter-node

1.4: Running our finished "ElasticSearch" service only.

Use this command to apply the YAML files we've created so far.

kubectl apply -f k8s-namespace.yaml -f k8s-elasticsearch-svc.yaml -f k8s-elasticsearch-statefulset.yaml

2: Creating Our Redis and Logstash Services

2.1 Redis Implementation in our kubernetes yaml file.

Now that we have the Elasticsearch service up and running, let us create our Redis instance.

N.B. The Service object is included in the same file for the sake of simplicity.

# k8s-redis.yaml
kind: Service
apiVersion: v1
metadata:
  name: redis
  namespace: elk-stack
  labels:
    app: redis
spec:
  ports:
    - port: 6379
  selector:
    app: redis
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis
  namespace: elk-stack
  labels:
    app: redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
        - name: redis
          image: bitnami/redis:latest
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          env:
            - name: REDIS_PASSWORD
              value: my_redis_password
          ports:
            - containerPort: 6379

2.2 Logstash Implementation

Pushing log data into Redis is not enough on its own: Logstash is required because it is responsible for pulling the data from Redis and feeding it to the Elasticsearch service. Here is the YAML file implementation.

Notice that we also have ConfigMap and Service (type: ClusterIP) objects, for configuration and networking respectively.

# k8s-logstash.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-config
  namespace: elk-stack
data:
  logstash.conf: |-
    input {
      redis {
        host => "redis"
        password => "my_redis_password"
        key => "my_key_logs"
        data_type => "list"
      }
    }
    output {
      elasticsearch {
        hosts => "elasticsearch:9200"
        user => "elastic"
        password => "changeme"
        index => "logstash-%{+YYYY.MM.dd}"
        sniffing => false
      }
    }
  logstash.yml: |-
    http.host: "0.0.0.0"
    path.config: /usr/share/logstash/pipeline
    xpack.monitoring.enabled: false
    xpack.monitoring.elasticsearch.username: elastic
    xpack.monitoring.elasticsearch.password: changeme
---
kind: Service
apiVersion: v1
metadata:
  name: logstash
  namespace: elk-stack
  labels:
    app: logstash
spec:
  ports:
    - port: 9600
  selector:
    app: logstash
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: logstash
  namespace: elk-stack
  labels:
    app: logstash
spec:
  replicas: 1
  selector:
    matchLabels:
      app: logstash
  template:
    metadata:
      labels:
        app: logstash
    spec:
      containers:
        - name: logstash
          ports:
            - containerPort: 9600
          image: docker.elastic.co/logstash/logstash:7.8.0
          volumeMounts:
            - name: config
              mountPath: /usr/share/logstash/config/logstash.yml
              subPath: logstash.yml
              readOnly: true
            - name: pipeline
              mountPath: /usr/share/logstash/pipeline
              readOnly: true
          command:
            - logstash
          resources:
            limits:
              memory: 1Gi
              cpu: "200m"
            requests:
              memory: 400Mi
              cpu: "200m"
      volumes:
        - name: pipeline
          configMap:
            name: logstash-config
            items:
              - key: logstash.conf
                path: logstash.conf
        - name: config
          configMap:
            name: logstash-config
            items:
              - key: logstash.yml
                path: logstash.yml

To run our Redis and Logstash service:

kubectl apply -f k8s-redis.yaml -f k8s-logstash.yaml

3: Kibana Implementation

3.1: Kibana Implementation for a Dashboard view.

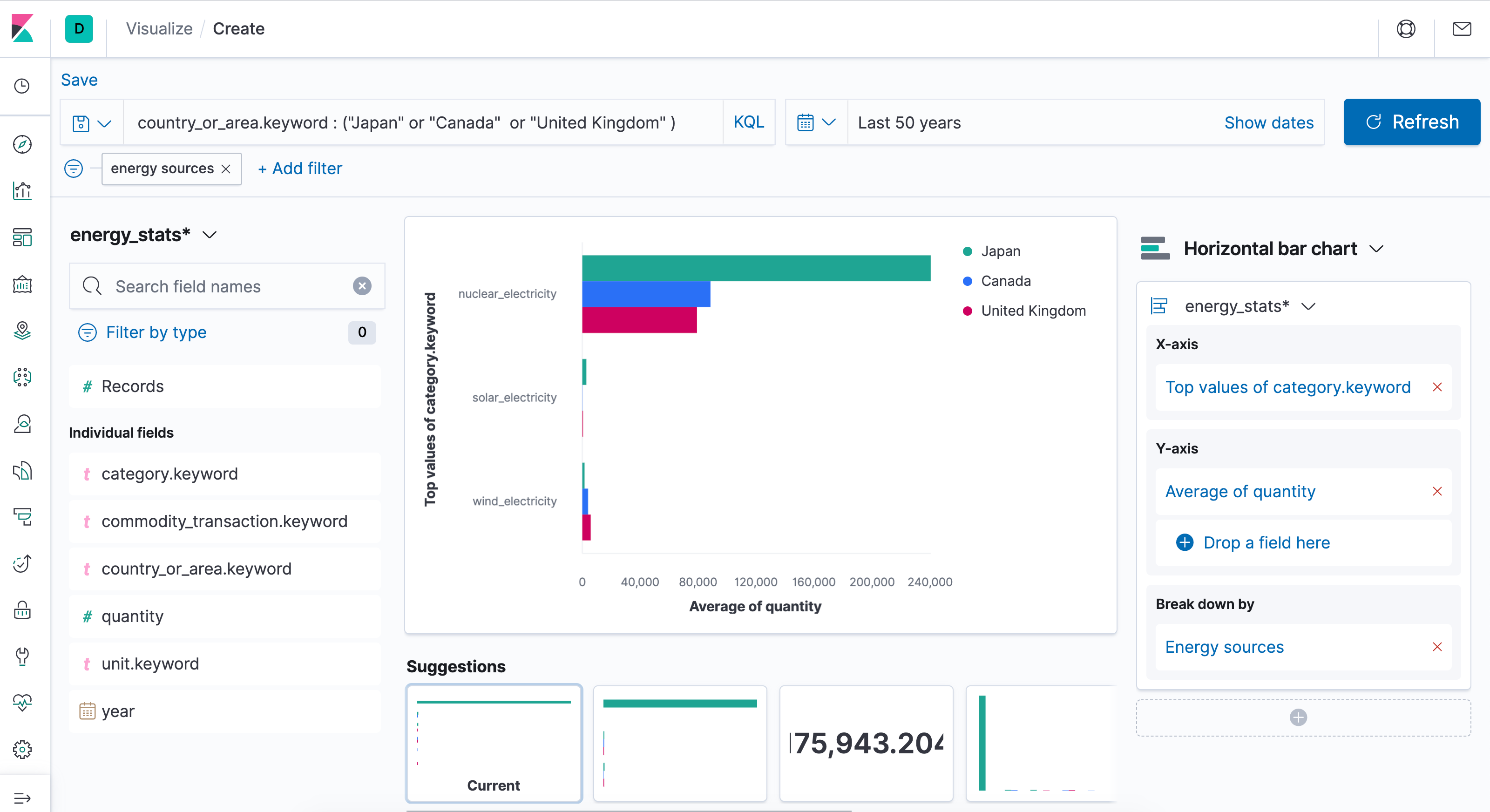

We've reached our last piece: Kibana. Kibana is responsible for showing the log data stored in our Elasticsearch service. It also gives us the power to filter the logs and see what was logged at what time, and so on.
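
For Kibana to have anything to show, something has to push entries onto the Redis list that Logstash reads. The article does not show the producer side, so here is a minimal sketch of one way to try it out: a one-off Kubernetes Job that uses redis-cli to RPUSH a JSON document onto the "my_key_logs" list configured in logstash.conf. The Job name, the file name and the fields of the sample log entry are hypothetical; the host, password and key come from the manifests in this article. It assumes redis-cli is on the PATH of the bitnami/redis image.

```yaml
# k8s-push-test-log.yaml (hypothetical file, not part of the original set)
apiVersion: batch/v1
kind: Job
metadata:
  name: push-test-log
  namespace: elk-stack
spec:
  backoffLimit: 2
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: push-test-log
          # Same image as the Redis Deployment; used here only for its redis-cli binary.
          image: bitnami/redis:latest
          command:
            - redis-cli
            - -h
            - redis                # the Redis Service name
            - -a
            - my_redis_password    # same value as REDIS_PASSWORD
            - RPUSH
            - my_key_logs          # same key as in logstash.conf
            # Sample entry; the field names are made up for this example.
            - '{"service":"demo-service","level":"info","message":"hello from the test job"}'
```

A real micro-service would do the same thing through its Redis client library: append one JSON document per log event to that list, and let Logstash drain it at its own pace.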

N.B. k8s-kibana.yaml also contains the ConfigMap and Service objects.

# k8s-kibana.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: kibana-config
  namespace: elk-stack
data:
  kibana.yml: |-
    server.name: kibana
    server.host: 0.0.0.0
    elasticsearch.hosts: [ "http://elasticsearch:9200" ]
    monitoring.ui.container.elasticsearch.enabled: true
    elasticsearch.username: elastic
    elasticsearch.password: changeme
---
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: elk-stack
  labels:
    app: kibana
spec:
  ports:
    - port: 80
      targetPort: 5601
  selector:
    app: kibana
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: elk-stack
  labels:
    app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
        - name: kibana
          image: docker.elastic.co/kibana/kibana:7.8.0
          volumeMounts:
            - name: pipeline
              mountPath: /usr/share/kibana/config/kibana.yml
              subPath: kibana.yml
              readOnly: true
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 200m
          ports:
            - containerPort: 5601
      volumes:
        - name: pipeline
          configMap:
            name: kibana-config
            items:
              - key: kibana.yml
                path: kibana.yml

3.2 Ingress for Kibana Service.

Since Kibana is the only service that faces the public, let us create an Ingress object for it.

# k8s-ingress-kibana.yaml
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: kibana-ingress
  namespace: elk-stack
spec:
  rules:
    - host: logs.example.com
      http:
        paths:
          - backend:
              serviceName: kibana
              servicePort: 80

I'll leave out the "letsencrypt" implementation for the sake of brevity. But if you want to set up the Kubernetes Ingress Controller (nginx type), here's the Link for it. And this is how you can easily run it on DigitalOcean:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.35.0/deploy/static/provider/do/deploy.yaml

# Then spin-up our kibana service with the following command.

kubectl apply -f k8s-ingress-kibana.yaml -f k8s-kibana.yaml
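
One caveat on the Ingress manifest above: it targets networking.k8s.io/v1beta1, and that API version was removed in Kubernetes 1.22. On a newer cluster the same rule has to be written against networking.k8s.io/v1. Here is a sketch of what the equivalent could look like, assuming an nginx ingress controller registered under the IngressClass name "nginx" (that name is an assumption; `kubectl get ingressclass` shows what your cluster actually has):

```yaml
# k8s-ingress-kibana.yaml, rewritten for networking.k8s.io/v1
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kibana-ingress
  namespace: elk-stack
spec:
  ingressClassName: nginx   # assumption: name of the installed IngressClass
  rules:
    - host: logs.example.com
      http:
        paths:
          - path: /
            pathType: Prefix   # path and pathType are required in v1
            backend:
              service:
                name: kibana
                port:
                  number: 80
```

A cluster that new would most likely also need a more recent ingress-nginx release than the v0.35.0 manifest applied above.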

If you're too lazy to read all of my nonsense 😉

Here is my GitHub repository link, which contains all the YAML files.